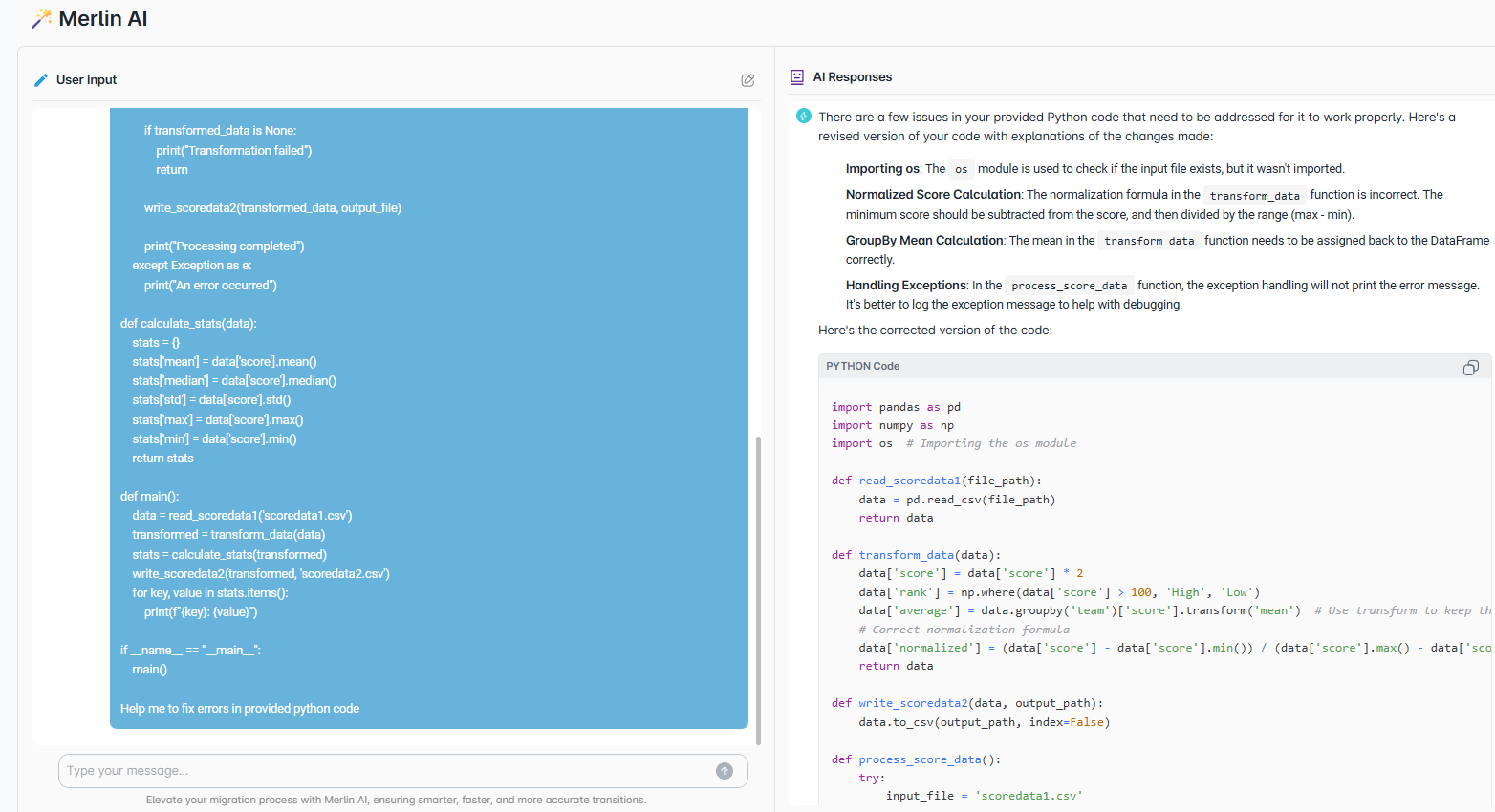

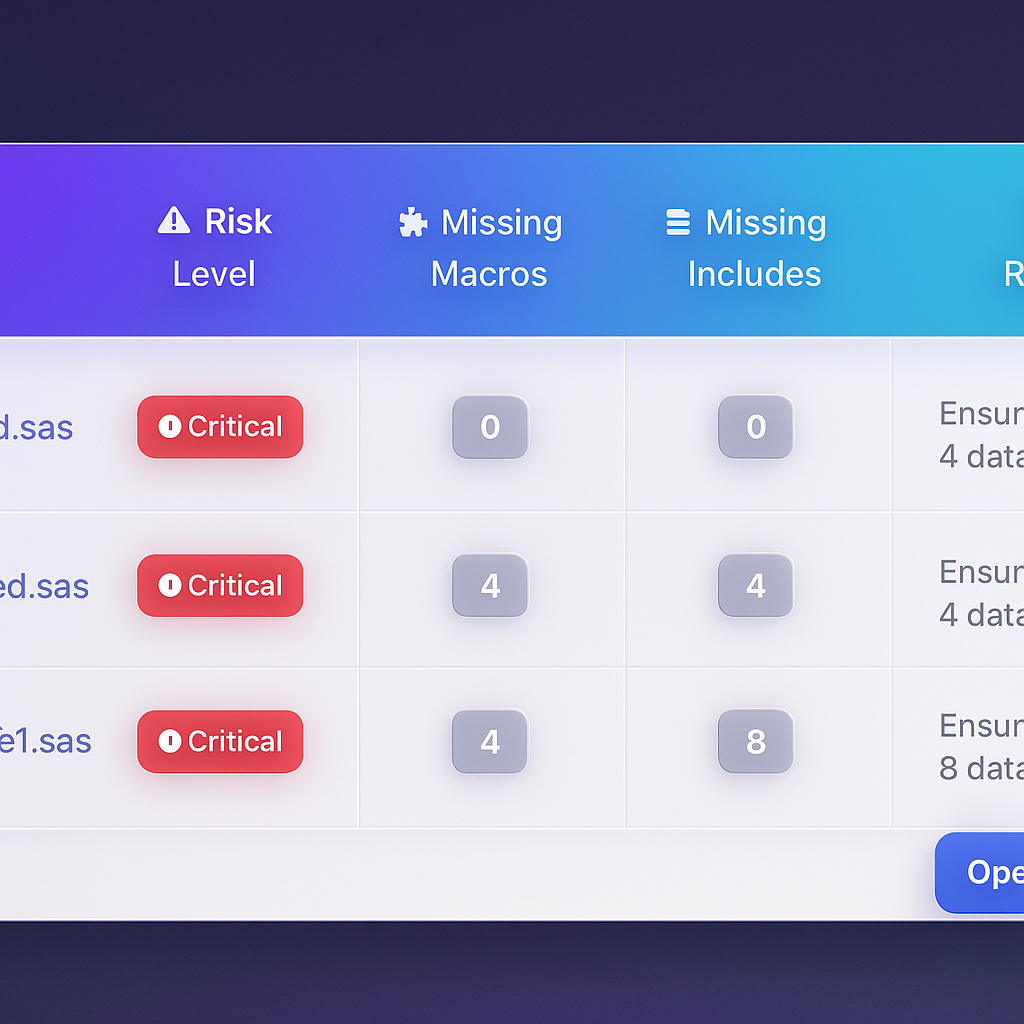

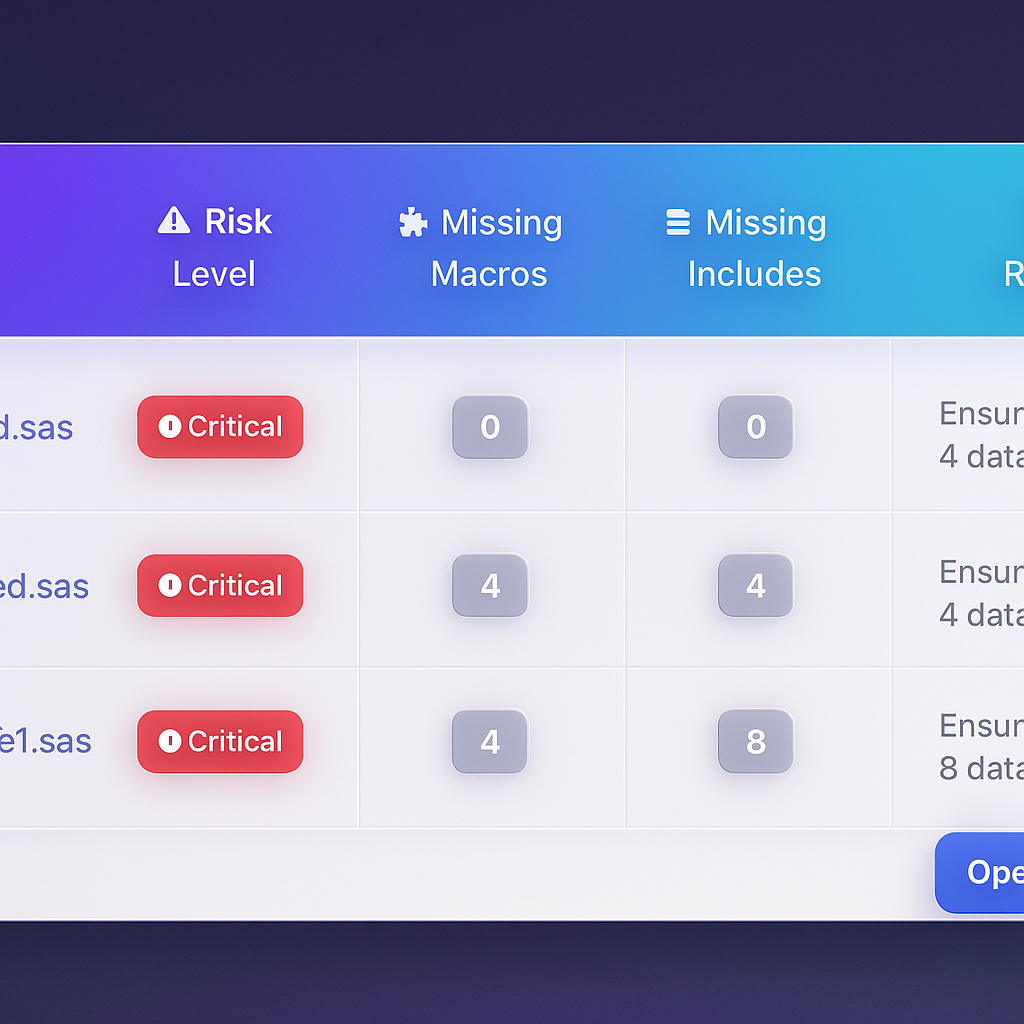

Code Analysis

Quickly assess thousands of scripts, map complexity and dependencies, and flag readiness. Get clear scope, a prioritized plan, safer cutovers, and faster production.

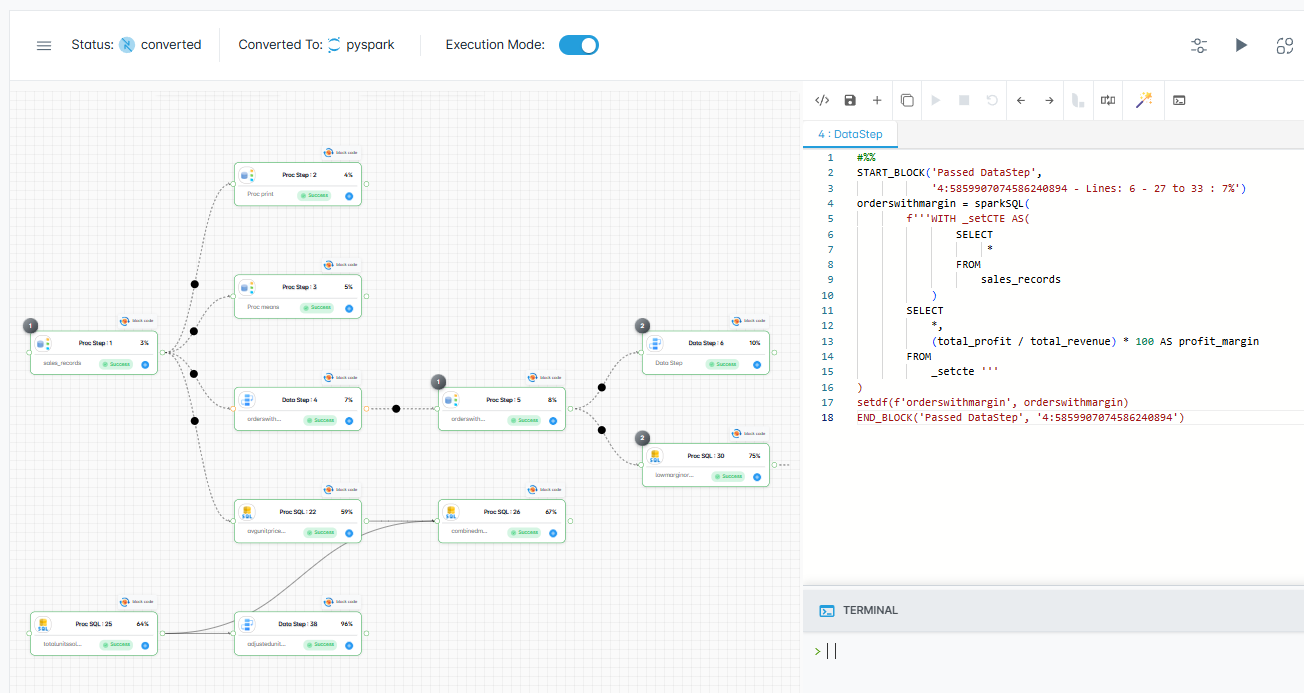

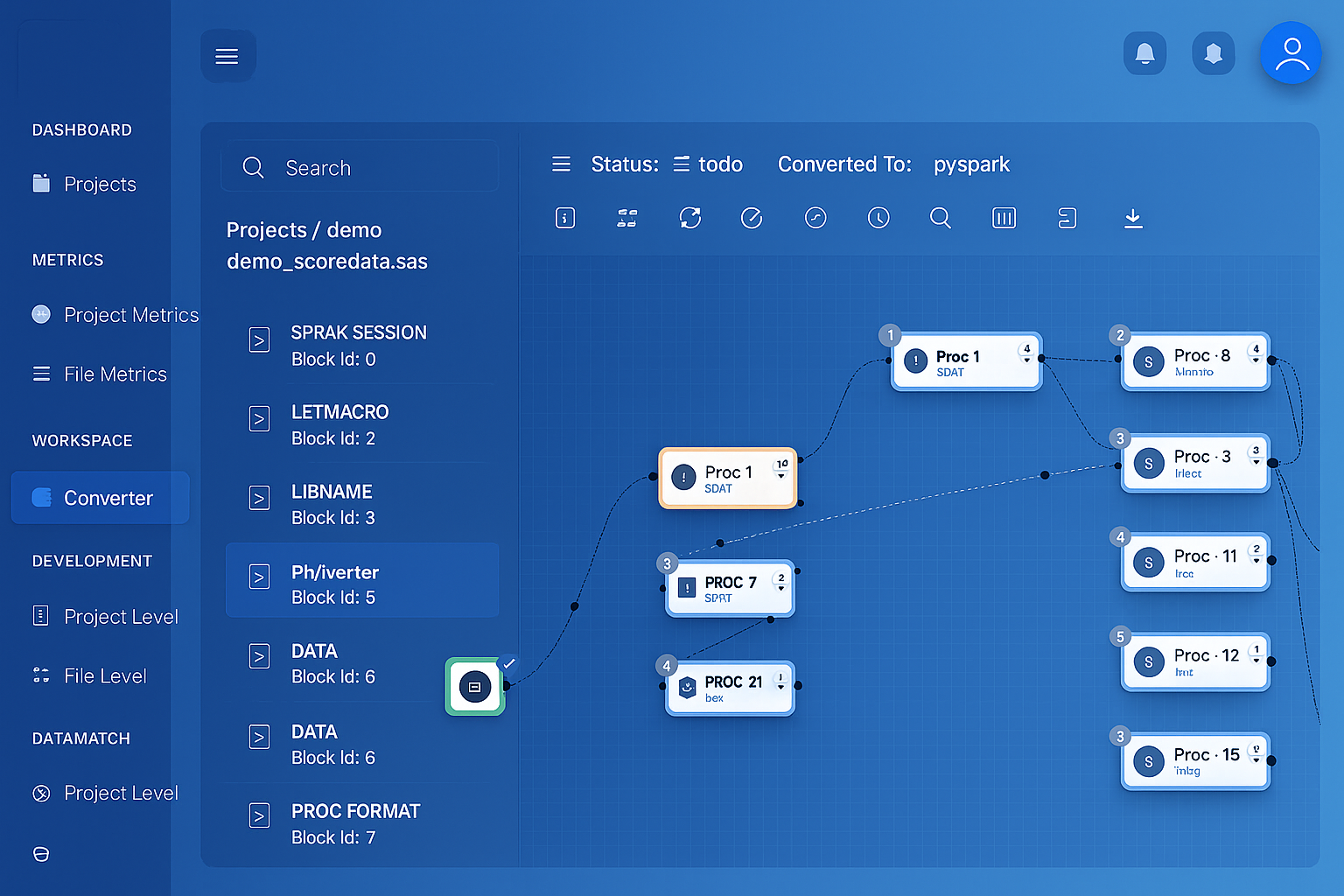

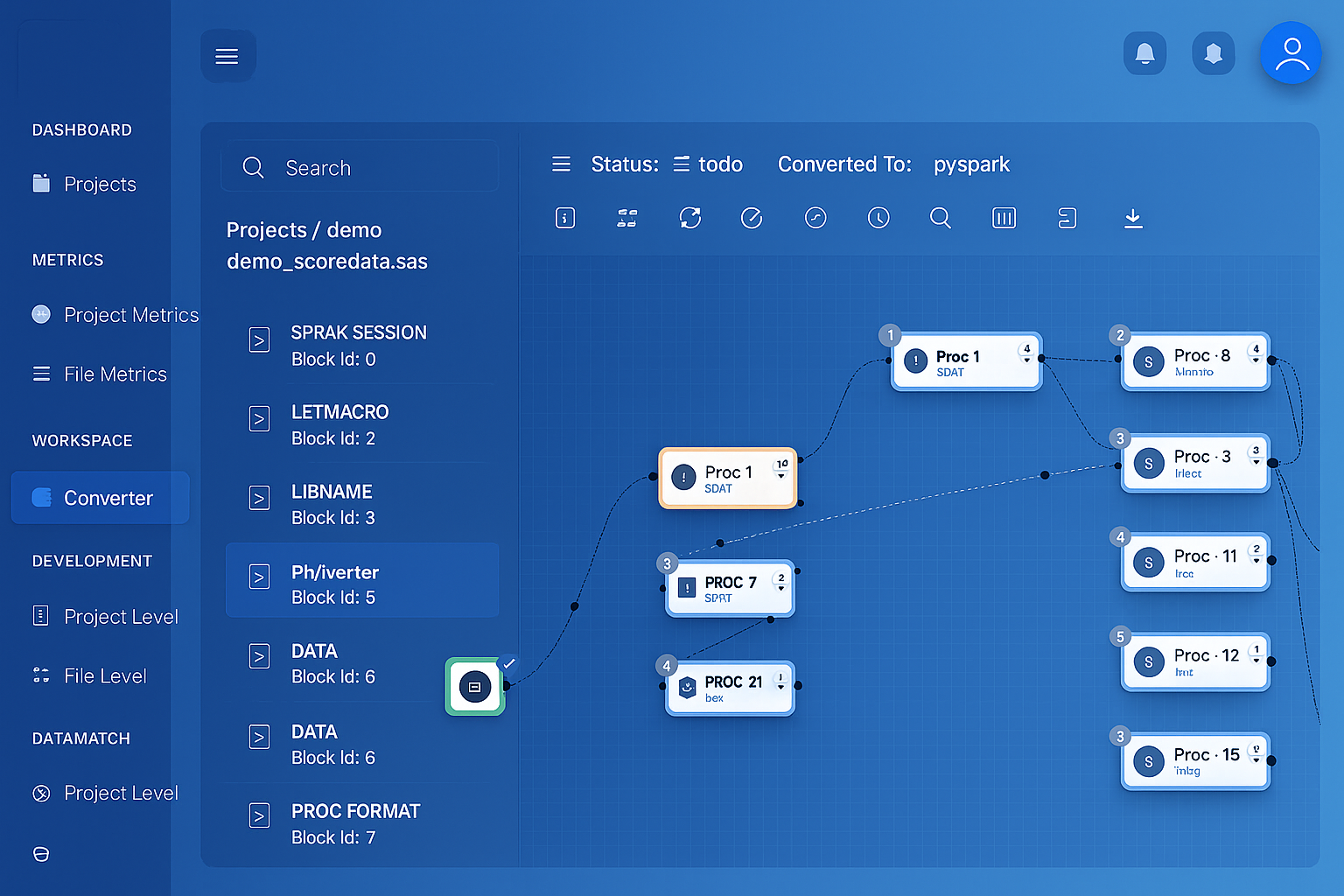

Visual Lineage

Visualize code across jobs, tables, and SQL to see sources, flows, and changes. Speeds impact checks, lowers migration risk, supports audits, and proves outputs match.

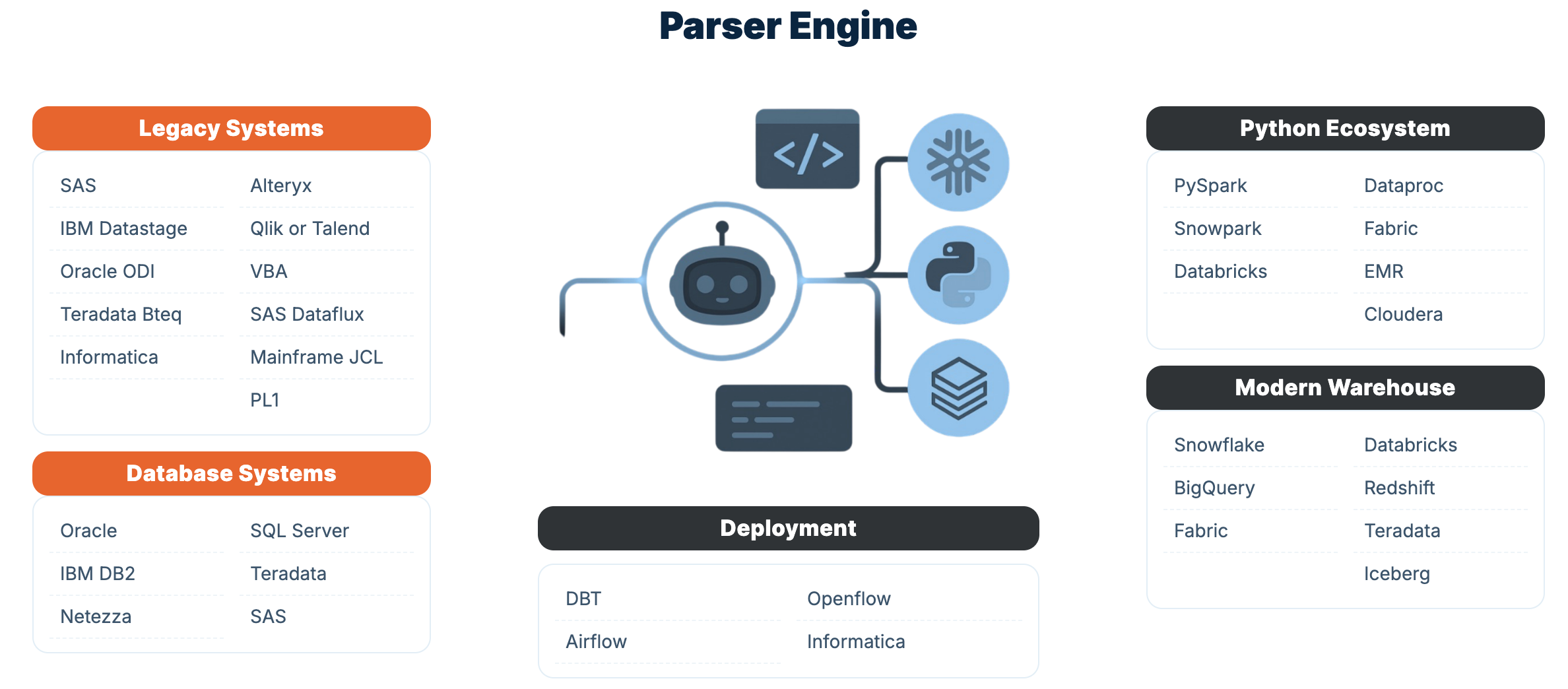

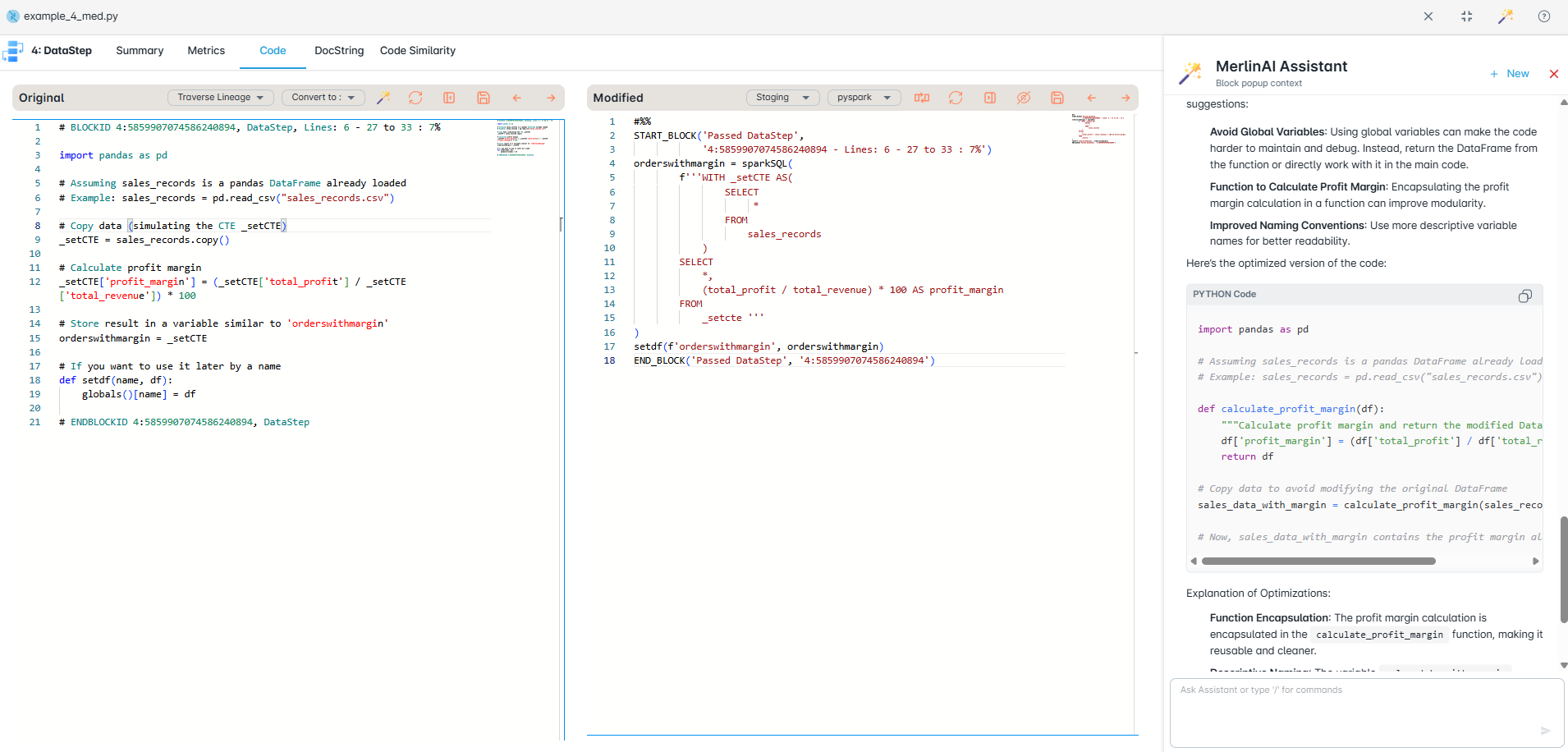

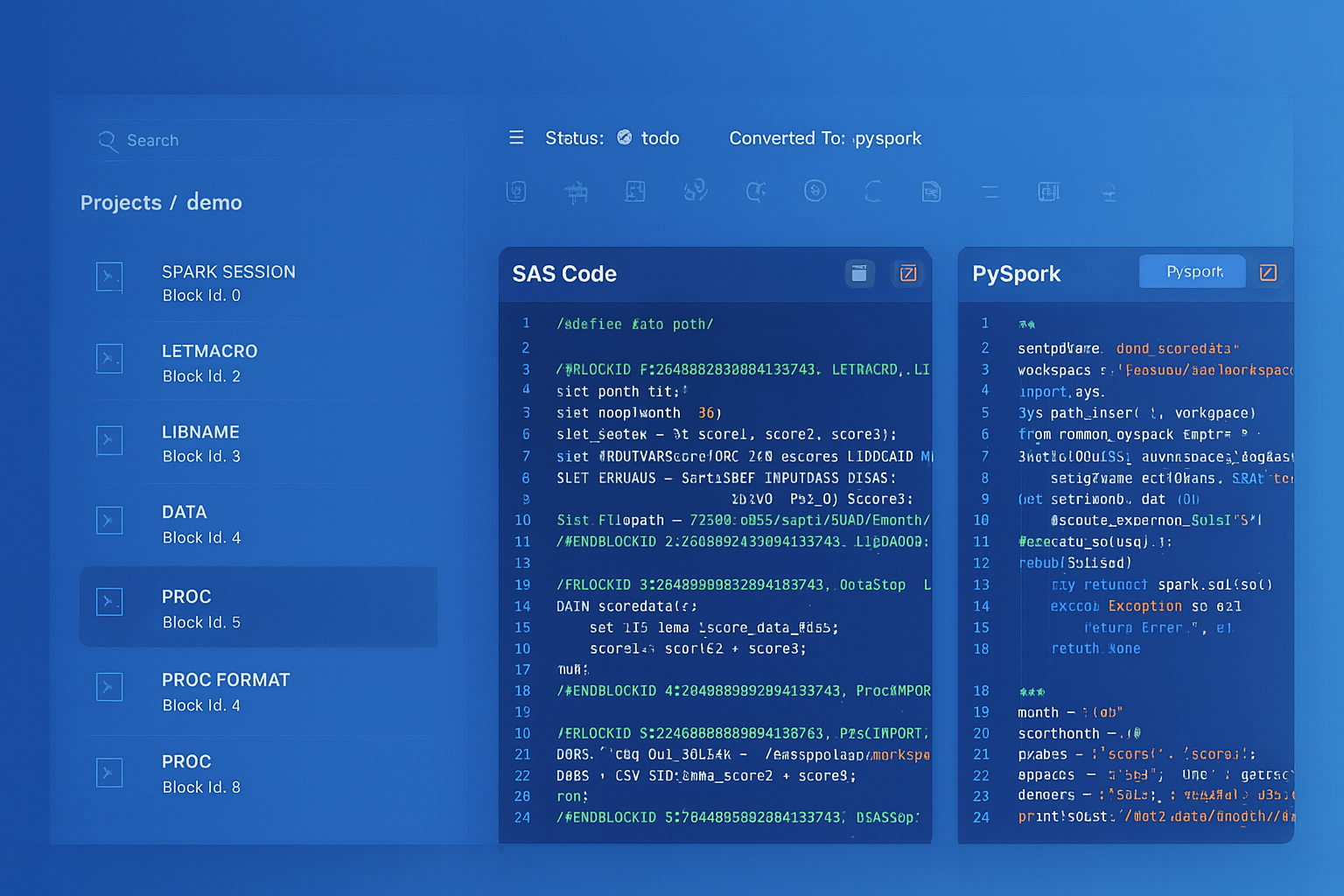

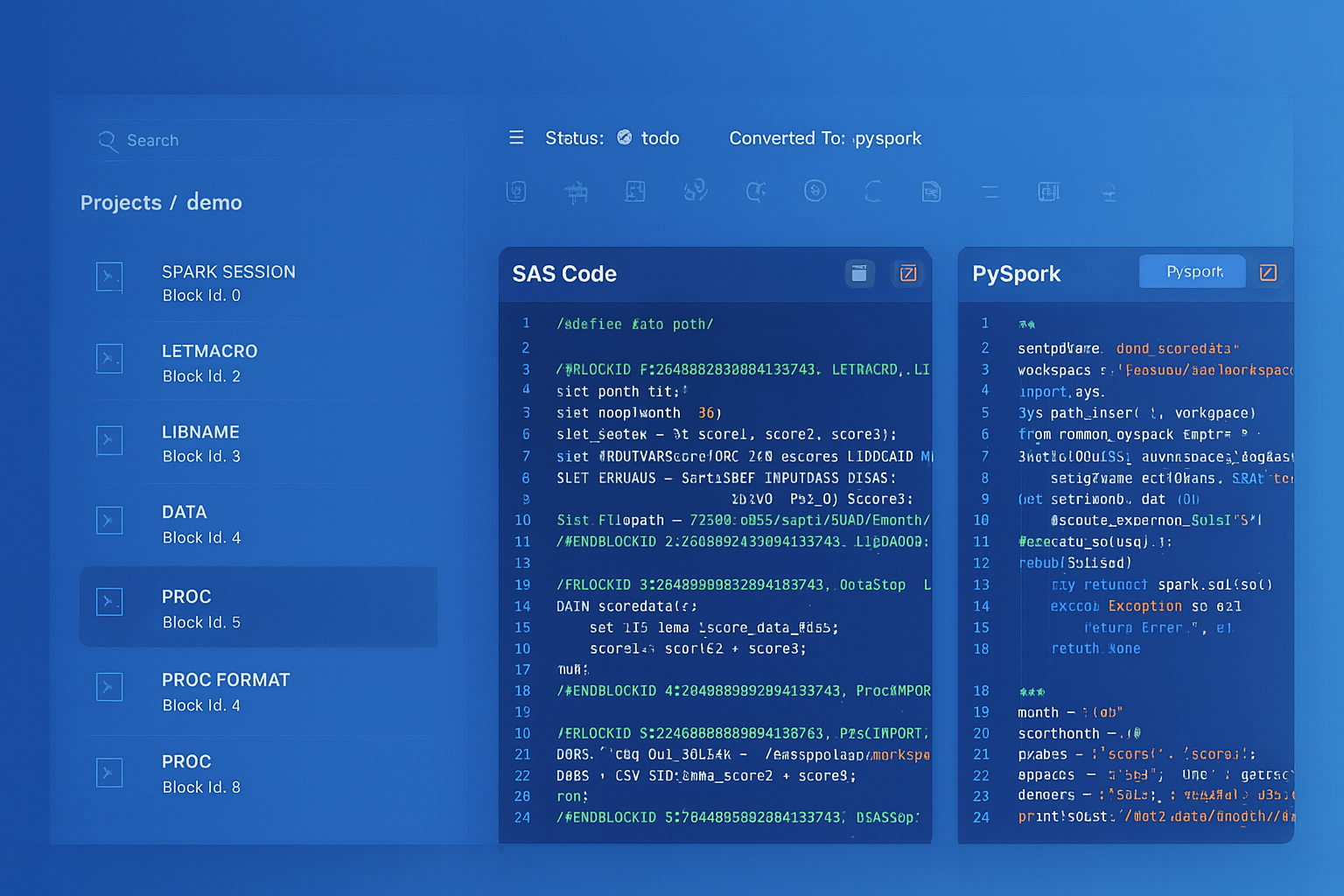

Code Conversion

Convert legacy SAS, DataStage, BTEQ, and more into Python, PySpark, Snowpark, or SQL with matched outputs. Modernize faster, keep logic intact, and avoid risky rewrites.

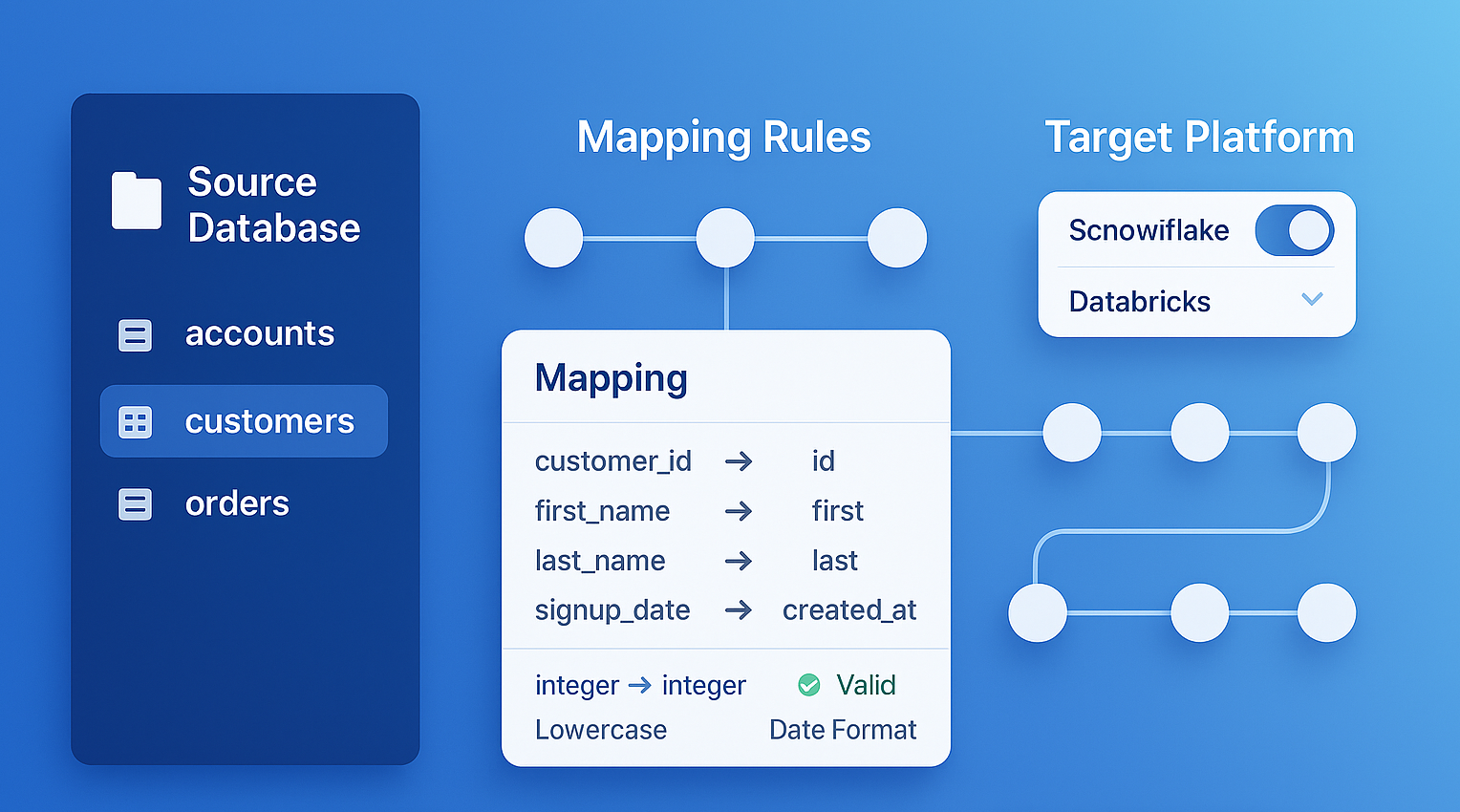

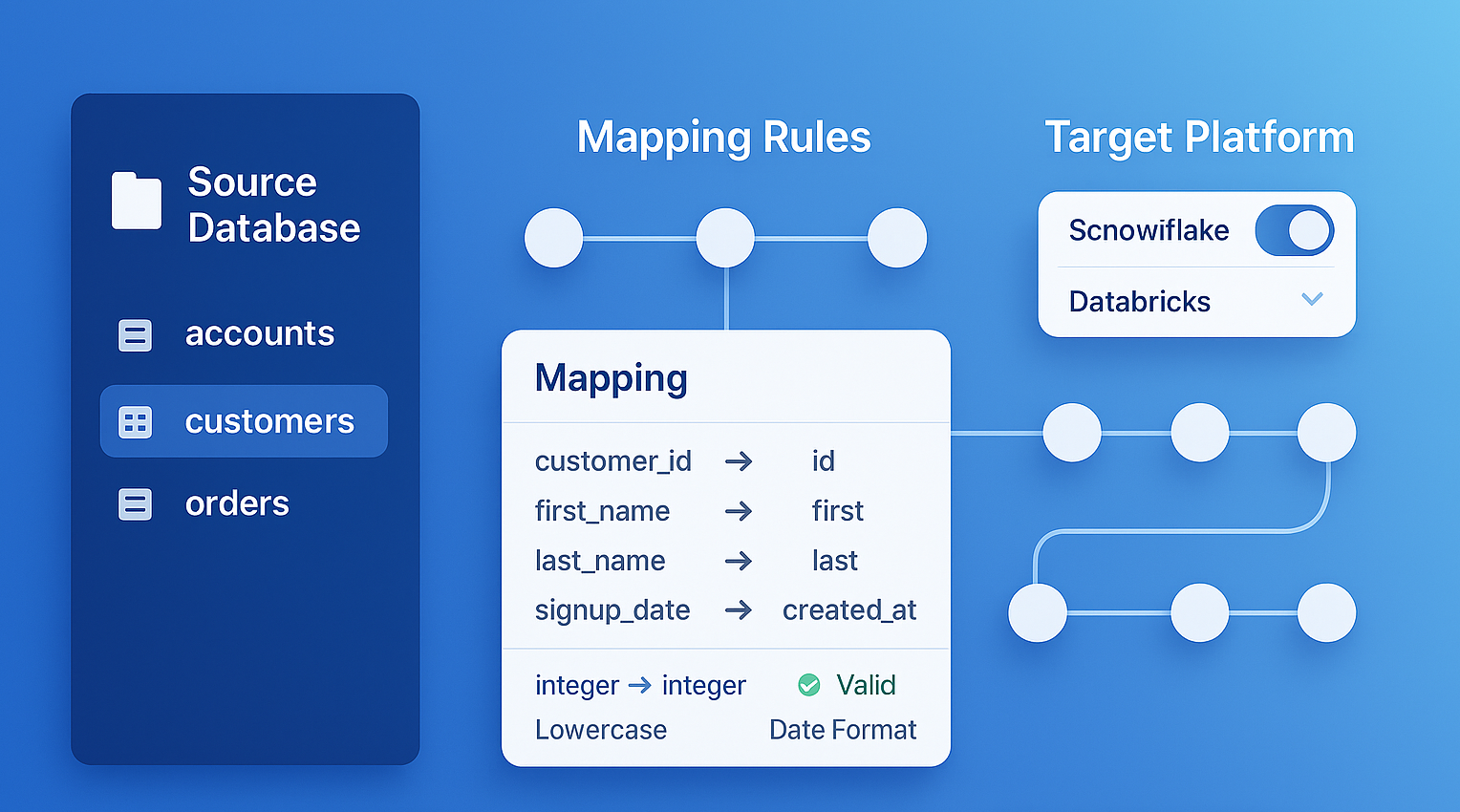

Data Mapper

Automatically map legacy schemas to Snowflake or Databricks with clear mappings. Cut migration risk, enforce naming and data types, and get audit-ready visibility.

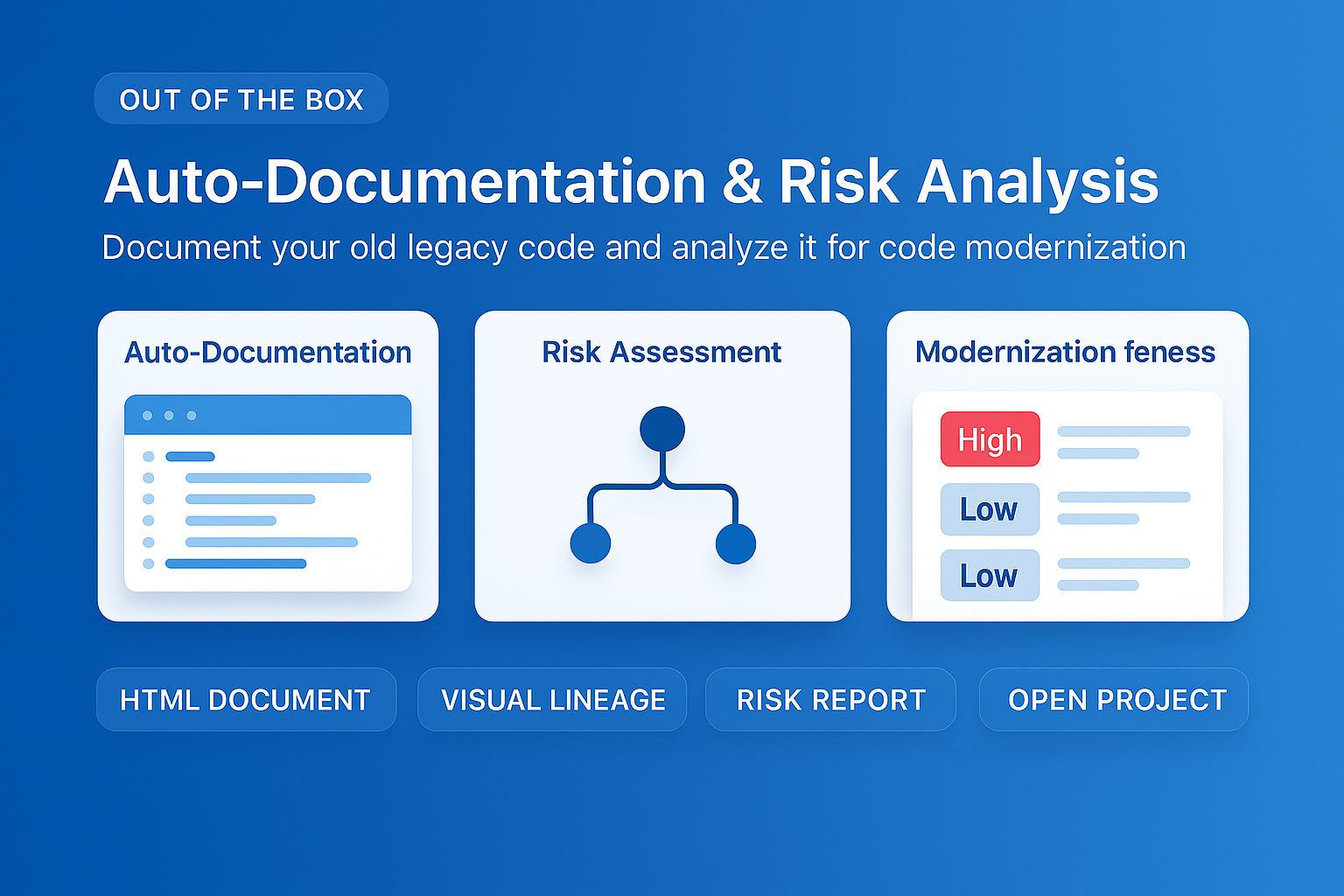

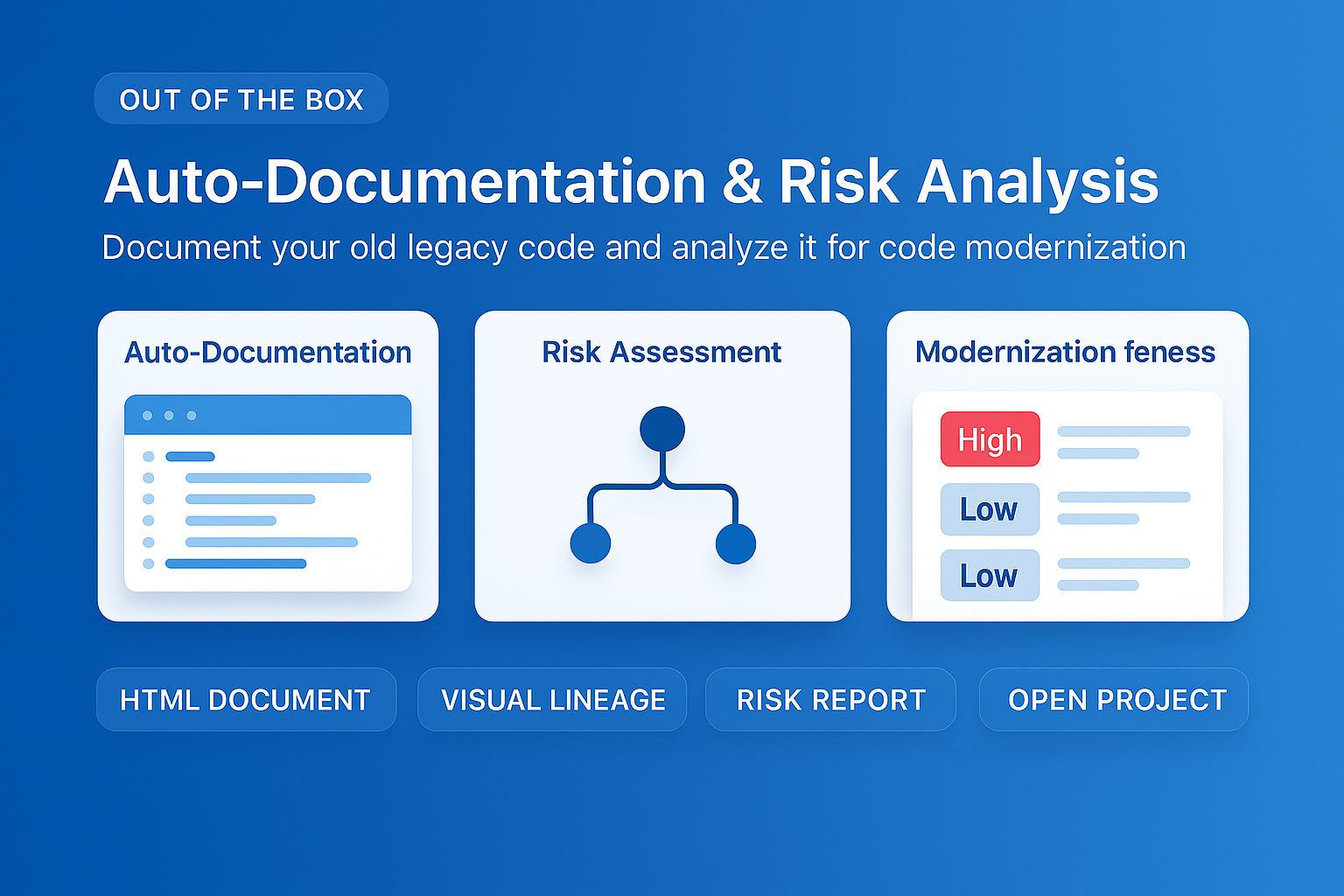

Auto Docs

Automatic documentation captures your legacy code and the new target code, detailing working components, parameters, and dependencies for clear traceability.

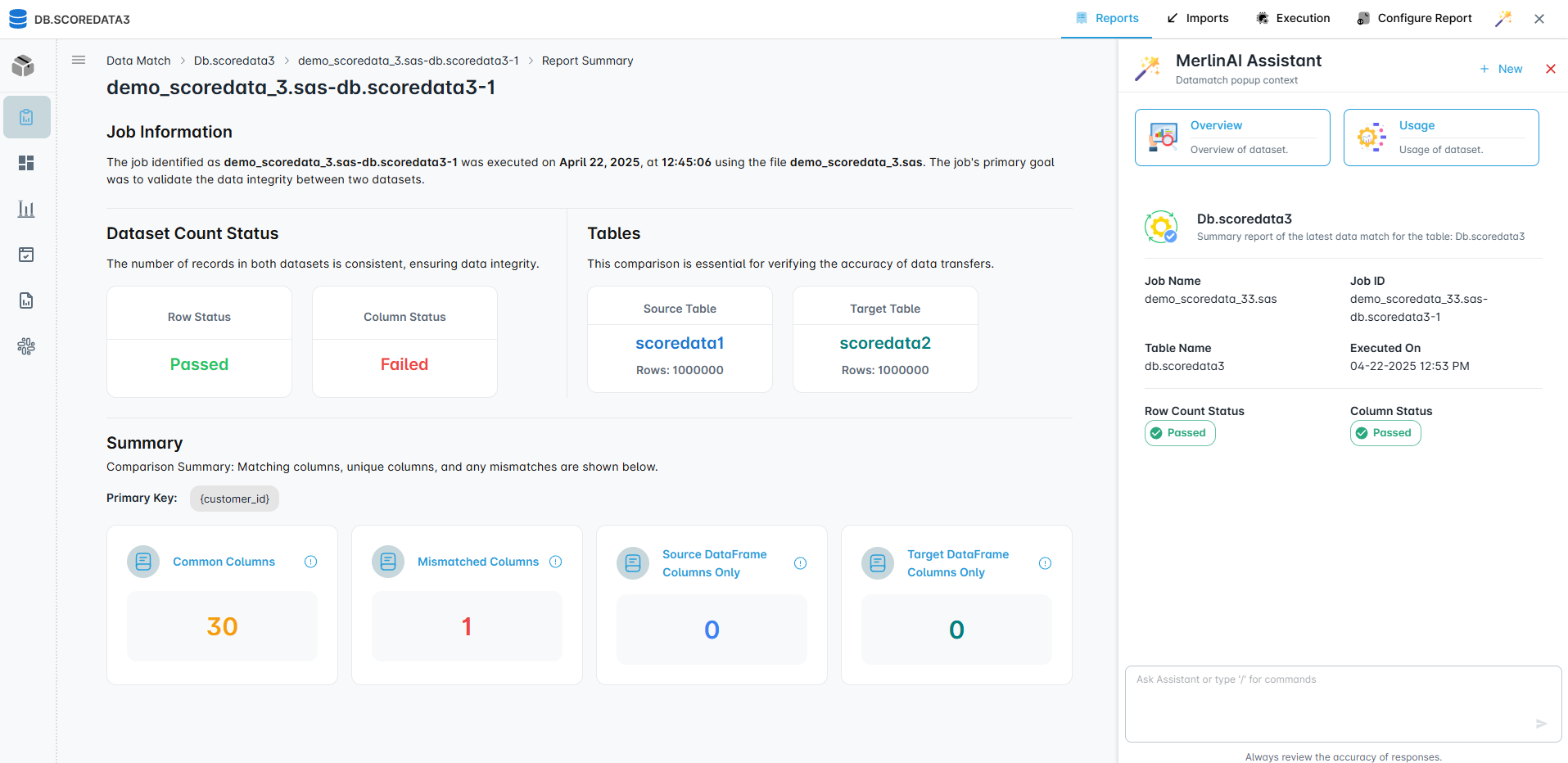

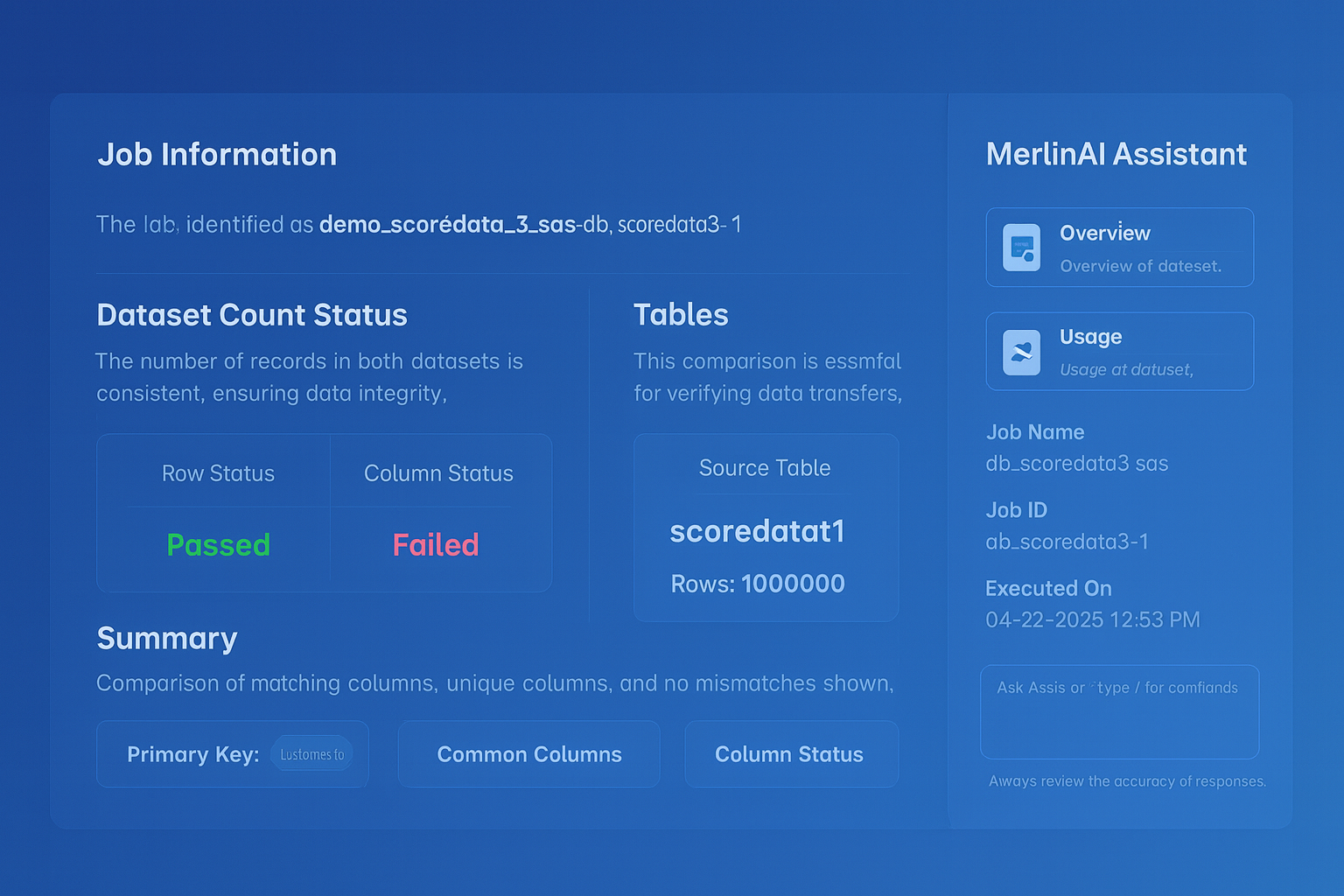

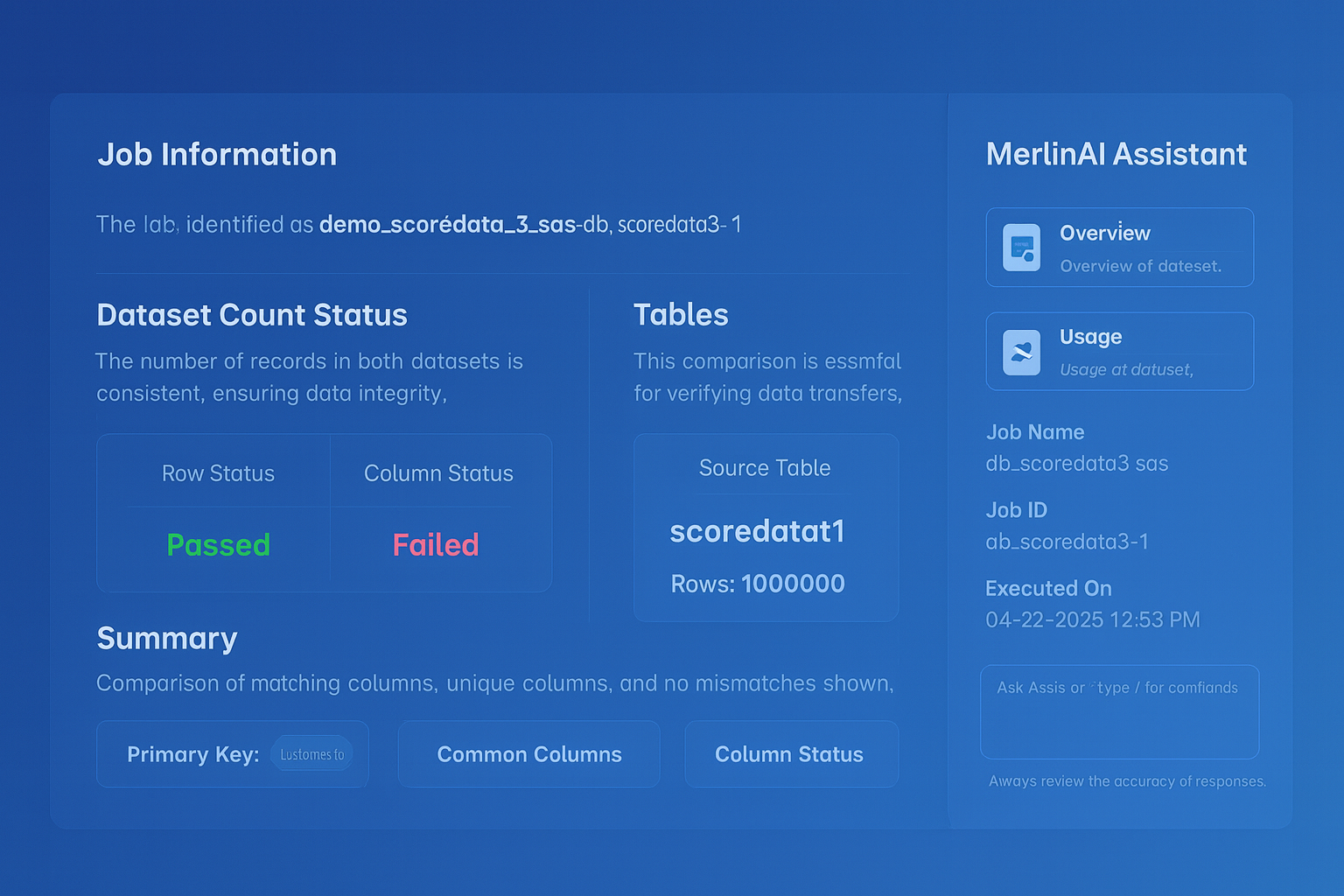

Data Matching

Compares source and target outputs at scale using configurable keys and rules. Flags mismatches, duplicates, and gaps with actionable reports for fast fixes.